The advent of Neural Processing Units (NPUs) has revolutionized machine learning by enabling efficient execution of the compute-intensive mathematical operations that deep learning requires. By accelerating matrix multiplications and convolutions in dedicated hardware, NPUs have expanded what AI models can do across deployments ranging from server farms to battery-operated devices.

The emergence of TinyML (Tiny Machine Learning) has pushed the boundaries of AI further still, focusing on running machine learning algorithms on resource-constrained embedded devices. TinyML aims to bring AI capabilities to billions of edge devices, allowing them to process data and make decisions locally and in real time without relying on cloud connectivity or powerful computing resources.

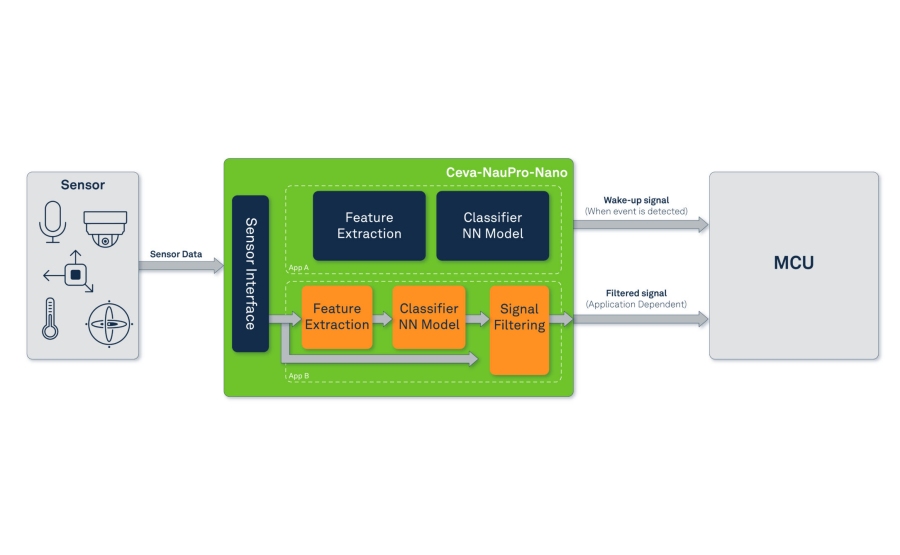

Building on the foundation of NPUs and the emerging field of TinyML, Ceva has introduced the groundbreaking Ceva-NeuPro-Nano. This compact and efficient NPU IP has been designed specifically for TinyML applications, offering a strong balance between performance and power efficiency. Ceva-NeuPro-Nano's architecture is optimized to run complete TinyML applications end to end, from data acquisition and feature extraction to model inference, making it a self-sufficient solution for resource-constrained, battery-operated devices.